AI in the cockpit

UPS Airlines Flight 1354 was a routine night cargo flight. At 4:03 a.m. on August 14, 2013, Captain Cerea Beal Jr. pushed the throttles forward, and the Airbus A300 took off normally from the airport in Louisville in the U.S. state of Kentucky. The autopilot flew Beal and co-pilot Shanda Fanning all the way to the approach into Birmingham, Alabama. Because the main runway, 6/24, was closed that morning, Flight 1354 had to use runway 18 instead. Runway 18 did not have a full instrument landing system (ILS), so Fanning had to enter the waypoints required for the approach into the flight management computer manually and in the correct sequence. While doing so, she made a fatal mistake. Nor did anyone in the cockpit react to the warnings issued by the on-board computer. “Oh my god,” Beal screamed as the aircraft crashed 1,480 meters (4,855 feet) short of the runway, broke into several pieces, and went up in flames. Both pilots were killed. Based on the cockpit voice recorder, investigators concluded that both pilots were fatigued and lacked concentration.

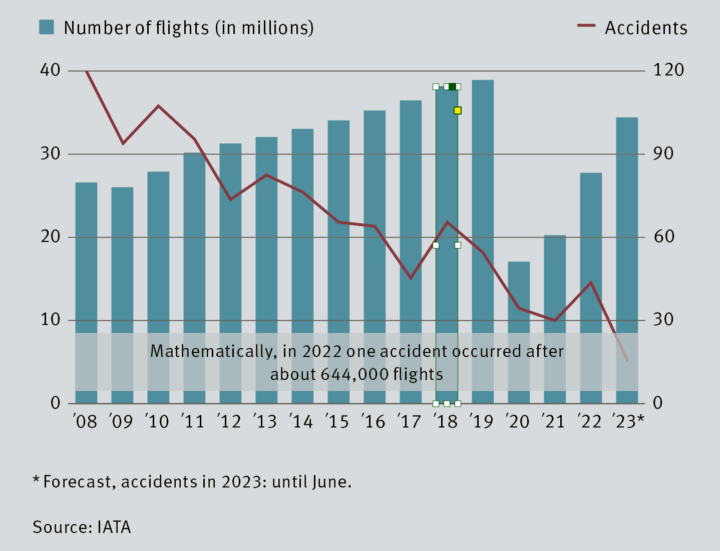

Although flying has become increasingly safe (see also the info element on page 32), microsleep, fatigue, and the resulting lack of concentration remain among the main causes of aircraft accidents, even ten years after the crash of UPS Airlines Flight 1354. In a survey conducted by the European Cockpit Association (ECA) among 6,800 pilots from 31 countries, 49.6 percent said that in the four weeks before the survey they had fallen asleep for several seconds one to four times. According to a study by insurer Allianz, 20 percent of accidents caused by human error can be attributed to pilots who had been on duty for ten hours or longer. One reason is a shortage of cockpit crews: according to a study by consultants Oliver Wyman, the world is short of almost 19,000 pilots. Airbus and Boeing each expect around 500,000 new pilots to be needed in the near future. Pilots can’t be conjured out of thin air, but perhaps they can be programmed.

Capturing, analyzing, and intervening

Modern jet aircraft can already fly through turbulence automatically. However, their systems are tuned to aerodynamic models and predefined scenarios, so edge cases still require human intervention. Rapid advances in AI technology are expected to bring even more digital autonomy into the cockpit.

Developers are pursuing several approaches. Besides greater safety, efficiency and cost-effectiveness play a role. Staffing flight decks with both a pilot and a co-pilot will become increasingly difficult. One answer is single-pilot operations, i.e., one-man or one-woman crews supported by virtual co-pilots.

46 %

of all fatal aircraft accidents occur during final approach and landing. Takeoff and climb account for 21 %, cruise for only 9 %.

Source: Boeing, data for 2013–2022

As a first step toward single-pilot operations, AI-based assessments of pilots’ health and fitness to fly could be used. The British start-up Blueskeye AI, for instance, has developed software that uses facial analysis and voice analysis to detect conditions such as fatigue, pain, depression, stress, or even anxiety. “The key thing is that we actually measure mental states, so we’re inferring from your sequence of actions and your behavior over time that the mind is becoming fatigued way before you start actually showing it by nodding off and closing your eyes, at which point it can be too late,” explained Blueskeye AI’s founder and CEO Michel Valstar. Beal and Fanning might never have taken off on their fatal flight if data had indicated that they were not fit to fly.
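Blueskeye AI has not disclosed how its models work, so the following is only a loose illustration of the principle Valstar describes: judging fatigue from behavior over a period of time rather than from a single moment. It computes PERCLOS, a drowsiness measure long used in driver-monitoring research, i.e., the share of frames within a sliding window in which the eyes are at least 80 percent closed. The class name, the per-frame eye-openness input (assumed to come from some upstream face-tracking step), the window length, and the alarm threshold are all hypothetical choices for this sketch.

```python
from collections import deque


class PerclosMonitor:
    """Hypothetical sliding-window PERCLOS (P80) fatigue monitor.

    PERCLOS = fraction of recent video frames in which the eyes are at
    least 80 % closed. Window length and alarm threshold are assumptions.
    """

    def __init__(self, window_frames=1800, alarm_threshold=0.15):
        # 1800 frames is roughly one minute at an assumed 30 frames per second.
        self.window = deque(maxlen=window_frames)  # oldest frames drop out automatically
        self.alarm_threshold = alarm_threshold

    def update(self, eye_openness):
        """Add one frame (0.0 = fully closed, 1.0 = fully open); return PERCLOS."""
        self.window.append(eye_openness <= 0.2)  # True if at least 80 % closed
        return sum(self.window) / len(self.window)

    def is_fatigued(self):
        """Raise the alarm only once the window is full, to avoid early false alarms."""
        if len(self.window) < self.window.maxlen:
            return False
        return sum(self.window) / len(self.window) >= self.alarm_threshold


# Usage with a tiny 10-frame window so the effect is visible.
monitor = PerclosMonitor(window_frames=10, alarm_threshold=0.3)
for openness in [0.9, 0.8, 0.1, 0.9, 0.15, 0.1, 0.85, 0.05, 0.9, 0.1]:
    perclos = monitor.update(openness)
print(perclos, monitor.is_fatigued())  # prints 0.5 True
```

Measuring over a window rather than per frame means a single blink does not trigger an alarm, while a gradual rise in eye closure does.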

AI and automation experts at the Massachusetts Institute of Technology (MIT) are taking this a step further. The Air Guardian system being developed there is designed not only to monitor pilots, using eye tracking among other methods, and to issue warnings when readings are unusual, but also to take control of the aircraft in an emergency, acting as a virtual co-pilot.
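MIT describes Air Guardian as comparing where the pilot is looking (from eye tracking) with where its neural network directs its attention (saliency maps). The sketch below shows one simple, hypothetical way to turn such a comparison into a decision: both attention maps are normalized over the same grid, their agreement is measured by histogram intersection, and an escalation rule chooses between doing nothing, warning, and taking over. The overlap metric, the thresholds, and all names are assumptions for illustration, not MIT’s implementation.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "warn"
    TAKE_OVER = "take_over"


def normalize(grid):
    """Flatten a 2-D attention map and scale it so its values sum to 1."""
    flat = [float(v) for row in grid for v in row]
    total = sum(flat)
    if total <= 0:
        raise ValueError("attention map must contain positive values")
    return [v / total for v in flat]


def attention_overlap(gaze, saliency):
    """Histogram intersection: 1.0 = identical attention, 0.0 = no overlap."""
    g, s = normalize(gaze), normalize(saliency)
    if len(g) != len(s):
        raise ValueError("maps must cover the same grid")
    return sum(min(a, b) for a, b in zip(g, s))


def decide(overlap, hazard, warn_below=0.5, take_over_below=0.2):
    """Hypothetical escalation rule.

    Good agreement -> no action; poor agreement -> warn; very poor
    agreement while a hazard is detected -> hand control to the AI.
    """
    if overlap >= warn_below:
        return Action.NONE
    if hazard and overlap < take_over_below:
        return Action.TAKE_OVER
    return Action.WARN


# Usage: the pilot looks at the top-left cell while the AI attends to the top-right cell.
gaze = [[1, 0], [0, 0]]
saliency = [[0, 1], [0, 0]]
print(decide(attention_overlap(gaze, saliency), hazard=True))  # prints Action.TAKE_OVER
```

Keeping warning and takeover as separate thresholds reflects the graded response described above: the system first alerts the pilot and only assumes control when a hazard coincides with clearly misplaced attention.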

AI must learn to fly

In the Next Generation Intelligent Cockpit (NICo) that researchers at the German Aerospace Center (DLR) are developing, a virtual colleague is likewise meant to assist the captain. The requirements for such a digital assistant are enormous because flying an airplane is a highly complex task. In addition to the extensive technology, highly dynamic factors such as current and forecast weather (thunderstorms, wake turbulence, icing), air traffic density, and challenging takeoff and landing conditions must be factored into decisions. Every flight is a calculation with an unpredictable number of unknowns.

Current AI systems reach their limits in the face of such complexity. Their main problem is that they acquire their knowledge during training and subsequently just retrieve it. But how do you train for the unpredictable? This is where liquid neural networks (LNNs), which MIT uses in its Air Guardian, come in. These next-generation neural networks can not only process large volumes of data but are also said to learn in real time, a kind of on-the-job training. The virtual co-pilot is meant to gain experience with every flight and every dangerous situation.
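The liquid neural networks developed at MIT build on so-called liquid time-constant (LTC) neurons, described by Hasani and colleagues, whose state x follows the differential equation dx/dt = -[1/τ + f(x, I)] · x + f(x, I) · A. Because the gate f depends on the current input I, the neuron’s effective time constant, τ / (1 + τ · f), changes with what the network is seeing. The sketch below simulates a single such neuron with a sigmoid gate and the fused semi-implicit Euler step proposed for LTC networks; all parameter values and the “calm”/“gust” inputs are arbitrary illustrations. It only shows this input-dependent adaptation at run time: the weights stay fixed, and the sketch does not implement in-flight learning.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class LTCNeuron:
    """Single liquid time-constant (LTC) neuron; parameter values are illustrative."""

    def __init__(self, tau=1.0, a=1.0, w_in=2.0, w_rec=0.5, bias=0.0):
        self.tau = tau      # base time constant
        self.a = a          # bias value A that the gate pulls the state toward
        self.w_in = w_in    # input weight
        self.w_rec = w_rec  # recurrent weight on the neuron's own state
        self.bias = bias
        self.x = 0.0        # hidden state

    def gate(self, inp):
        """f(x, I): bounded nonlinearity of state and input, in (0, 1)."""
        return sigmoid(self.w_in * inp + self.w_rec * self.x + self.bias)

    def effective_tau(self, inp):
        """System time constant tau / (1 + tau * f): shorter when the gate is more open."""
        return self.tau / (1.0 + self.tau * self.gate(inp))

    def step(self, inp, dt=0.1):
        """Fused semi-implicit Euler step of dx/dt = -(1/tau + f) * x + f * A."""
        f = self.gate(inp)
        self.x = (self.x + dt * f * self.a) / (1.0 + dt * (1.0 / self.tau + f))
        return self.x


neuron = LTCNeuron()
calm, gust = 0.0, 3.0  # hypothetical normalized input levels
print(neuron.effective_tau(calm) > neuron.effective_tau(gust))  # prints True
for _ in range(50):
    state = neuron.step(gust)
print(round(state, 3))
```

A strong input opens the gate, which shortens the time constant, so the neuron reacts faster exactly when its input changes sharply.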

Flying is becoming safer and safer

But will the routines an AI system has learned be enough for it to take the right action intuitively, as Captain Chesley “Sully” Sullenberger did? Against all odds, he safely landed the Airbus A320 of US Airways Flight 1549 on the Hudson River in New York City in January 2009 after both engines failed following a bird strike. All 155 people on board survived. In his decision-making, Sullenberger also drew on the experience he had accumulated over some 20,000 flight hours. Time will tell whether artificial intelligence systems will also be able to act intuitively against the odds.

The remote co-pilot

Remote co-pilots are an alternative to virtual co-pilots. This technology is intended to let a human co-pilot monitor and control an aircraft remotely in real time without being physically present in the cockpit. Thanks to advanced communication and control systems, the remote co-pilot can actively take part in decision-making and help manage challenges.

The remote co-pilot on duty sits in front of a digital twin of the cockpit of the aircraft he or she may need to take control of. Because a remote co-pilot only needs to intervene in emergencies, he or she can handle several single-pilot operations at the same time, which makes this another way to ease the personnel shortage. One technical challenge still needs to be solved: “To enable real remote control of an aircraft from the ground in real time we need clearly better data connections in terms of stability, safety, and latency,” explains Christian Niermann, who works on remote co-pilots at DLR.
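The division of labor described here, one remote co-pilot supervising several single-pilot flights and stepping in only when needed, and only if the data link is good enough, can be sketched as a simple triage routine. Everything in it is hypothetical: the class names, the callsigns, and especially the latency and packet-loss limits, which merely stand in for the stability, safety, and latency requirements Niermann mentions; real limits would have to come out of certification work.

```python
from dataclasses import dataclass, field

# Hypothetical link requirements for direct remote control.
MAX_LATENCY_MS = 250.0
MAX_LOSS_RATE = 0.01


@dataclass
class LinkStatus:
    latency_ms: float  # round-trip delay of the air-ground data link
    loss_rate: float   # fraction of packets lost, 0.0 to 1.0
    secured: bool      # link authenticated and encrypted


@dataclass
class Flight:
    callsign: str
    emergency: bool
    link: LinkStatus


def link_allows_remote_control(link):
    return (link.secured
            and link.latency_ms <= MAX_LATENCY_MS
            and link.loss_rate <= MAX_LOSS_RATE)


@dataclass
class RemoteCoPilotStation:
    capacity: int  # how many single-pilot flights one remote co-pilot supervises
    flights: list = field(default_factory=list)

    def assign(self, flight):
        if len(self.flights) >= self.capacity:
            raise RuntimeError("station is at capacity")
        self.flights.append(flight)

    def triage(self):
        """Return the remote co-pilot's role for each assigned flight."""
        roles = {}
        for f in self.flights:
            if not f.emergency:
                roles[f.callsign] = "monitor"          # routine flight: watch only
            elif link_allows_remote_control(f.link):
                roles[f.callsign] = "remote_control"   # take over via the digital-twin cockpit
            else:
                roles[f.callsign] = "advise_by_voice"  # link too weak: support the on-board pilot
        return roles


station = RemoteCoPilotStation(capacity=3)
good = LinkStatus(latency_ms=120.0, loss_rate=0.001, secured=True)
poor = LinkStatus(latency_ms=600.0, loss_rate=0.05, secured=True)
station.assign(Flight("TEST101", emergency=False, link=good))
station.assign(Flight("TEST202", emergency=True, link=good))
station.assign(Flight("TEST303", emergency=True, link=poor))
print(station.triage())
# prints {'TEST101': 'monitor', 'TEST202': 'remote_control', 'TEST303': 'advise_by_voice'}
```

The fallback branch captures Niermann’s point: without a sufficiently reliable link, the remote co-pilot can only advise rather than fly.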

Focus on communication

There are already indications that artificial intelligence systems developed specifically for highly complex, dynamic environments such as aircraft are acquiring the ability to adapt flexibly to volatile conditions. This applies to cockpit communication as well. Thanks to its adaptability, real-time learning ability, and dynamic topology, MIT’s liquid neural network, for instance, is said to be very good at understanding long natural-language text sequences and even at detecting the emotions they express. Skills like these could make the dialog between humans and machines considerably simpler. All developers agree on one point: interaction between humans and AI must be as intuitive as possible. Nothing is more dangerous than driving up stress levels in a hazardous situation with complicated operating procedures.

Communication is becoming increasingly important on another level as well: growing air traffic and rising weather-related risks require more intensive connectivity between aircraft and their surroundings. Here, AI can help pilots operate on-board systems and make the decisions needed to continue a flight safely or to abort it safely. This is particularly relevant during takeoff and landing, the most stressful and dangerous phases of flight.

The system is meant not only to capture current external conditions such as weather and traffic but also to check them for plausibility. Monitoring the air data systems plays an important role because they are exposed to external environmental conditions and influences that can cause problems through icing, dirt accumulation, or bird strikes. To make future automatic functions more robust against system errors (especially sensor errors), the virtual co-pilot is to generate substitute sensor readings from other existing sensors by means of analytical redundancy. Faulty sensor data of the kind that, in October 2018, combined with the crew’s inadequate training on and familiarity with the associated MCAS (Maneuvering Characteristics Augmentation System) flight control software, led to the crash of a Lion Air Boeing 737 MAX would likely be detected and trigger appropriate emergency procedures. “This system represents the innovative approach to human-centered AI in aviation. Our use of liquid neural networks offers a dynamic and adaptive approach, ensuring that AI does not replace human judgment, but complements it, leading to greater security and collaboration in the skies,” emphasizes Ramin Hasani, who co-developed the LNNs at MIT.
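Analytical redundancy means deriving a substitute for a sensor reading from physical relationships between other, independent measurements. A textbook example relevant to the Lion Air accident, in which MCAS acted on erroneous angle-of-attack data, is estimating the angle of attack from pitch attitude and flight-path angle: in wings-level flight in still air, α ≈ θ - γ, where γ = arcsin(vertical speed / true airspeed). The sketch below uses that relation to cross-check two angle-of-attack vanes and falls back on the estimate if neither agrees. It is a deliberately simplified illustration, not DLR’s method; the 3-degree tolerance and the function names are assumptions, and a real system would also have to account for wind, bank angle, and sensor noise.

```python
import math


def synthetic_aoa_deg(pitch_deg, vertical_speed_mps, tas_mps):
    """Estimate angle of attack as pitch minus flight-path angle.

    Assumes wings-level flight in still air (no vertical wind, no sideslip).
    """
    if tas_mps <= 0:
        raise ValueError("true airspeed must be positive")
    ratio = max(-1.0, min(1.0, vertical_speed_mps / tas_mps))  # keep asin in its domain
    gamma_deg = math.degrees(math.asin(ratio))
    return pitch_deg - gamma_deg


def check_aoa_sensors(measured_deg, estimate_deg, tolerance_deg=3.0):
    """True for each vane whose reading agrees with the estimate (tolerance assumed)."""
    return {name: abs(value - estimate_deg) <= tolerance_deg
            for name, value in measured_deg.items()}


def voted_aoa_deg(measured_deg, estimate_deg, tolerance_deg=3.0):
    """Average the plausible vanes; if none is plausible, use the analytical estimate."""
    plausible = [value for value in measured_deg.values()
                 if abs(value - estimate_deg) <= tolerance_deg]
    return sum(plausible) / len(plausible) if plausible else estimate_deg


# Usage: shallow descent, right vane reading roughly 20 degrees too high.
estimate = synthetic_aoa_deg(pitch_deg=2.5, vertical_speed_mps=-4.0, tas_mps=80.0)
vanes = {"left": 5.0, "right": 25.4}
print(round(estimate, 2))                  # prints 5.37
print(check_aoa_sensors(vanes, estimate))  # prints {'left': True, 'right': False}
print(voted_aoa_deg(vanes, estimate))      # prints 5.0
```

With only two vanes, a simple comparison can tell that they disagree but not which one is wrong; the independent analytical estimate acts as the tie-breaker.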

“An exciting feature of our method is its differentiability. Our cooperative layer and the entire end-to-end process can be trained. We specifically chose the causal continuous-depth neural network model because of its dynamic features in mapping attention. The Air Guardian system isn’t rigid; it can be adjusted based on the situation’s demands, ensuring a balanced partnership between human and machine,” says MIT researcher Lianhao Yin. Such AI-supported cooperative monitoring could also be scaled down for use as a versatile assistant in cars, drones, or robotics. In other words, next-generation AI is on final approach.
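Why differentiability matters can be shown with a toy arbitration layer. A hard switch that hands control to the AI once the attention mismatch crosses a fixed threshold has a gradient of zero almost everywhere, so that threshold cannot be learned by gradient descent. Replacing it with a sigmoid weight makes the blended command smooth in every parameter, so it could sit inside an end-to-end training loop. This is only a hypothetical sketch of that idea, not MIT’s cooperative layer; the mismatch input, the parameters `sharpness` and `midpoint`, and all function names are assumptions.

```python
import math


def blend_weight(mismatch, sharpness, midpoint):
    """Smooth share of control given to the AI, between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-sharpness * (mismatch - midpoint)))


def cooperative_command(u_pilot, u_ai, mismatch, sharpness=10.0, midpoint=0.5):
    """Blend pilot and AI commands (e.g. a normalized pitch input)."""
    w = blend_weight(mismatch, sharpness, midpoint)
    return (1.0 - w) * u_pilot + w * u_ai


def d_command_d_midpoint(u_pilot, u_ai, mismatch, sharpness=10.0, midpoint=0.5):
    """Analytic gradient of the blended command with respect to the midpoint."""
    w = blend_weight(mismatch, sharpness, midpoint)
    dw_dmidpoint = -sharpness * w * (1.0 - w)  # chain rule through the sigmoid
    return (u_ai - u_pilot) * dw_dmidpoint


# Usage: check the analytic gradient against a central finite difference.
eps = 1e-6
numeric = (cooperative_command(0.0, 1.0, 0.6, midpoint=0.5 + eps)
           - cooperative_command(0.0, 1.0, 0.6, midpoint=0.5 - eps)) / (2 * eps)
analytic = d_command_d_midpoint(0.0, 1.0, 0.6)
print(abs(numeric - analytic) < 1e-6)  # prints True
```

Because the gradient is nonzero everywhere, a training procedure could adjust how readily control shifts toward the AI, which is the kind of situation-dependent balance Yin describes.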